Set the scene: It’s Q1 in a mid-market SaaS company. Traffic flatlines, CPCs rise, and the team gets a single directive from the CMO: “We need to win more visibility in the age of AI.” The marketing ops lead convenes a war room and says, “We need an end-to-end system that makes our content not just seen, but used by AI assistants and discovery engines.”

Introduce the challenge

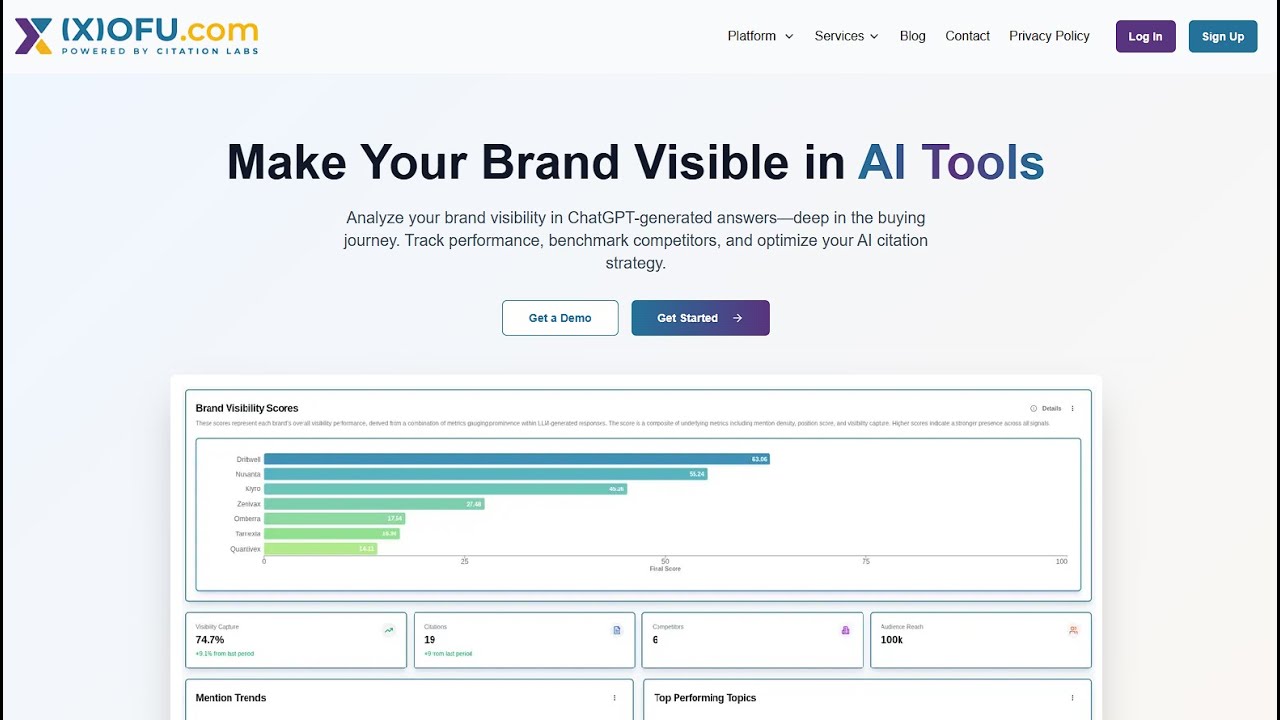

The problem is simple to state and hard to solve. Organic channels still matter, but now much of discovery lives inside AI-driven answer surfaces — chat assistants, search snippets, vertical knowledge graphs. Meanwhile, content teams are stretched thin, CMS workflows are manual, and attribution is noisy. The CMO wants measurable ROI. The head of product wants repeatable pipelines. The content director wants creativity preserved. This is the conflict: how to automate an entire visibility loop while preserving business performance and traceability?

We framed the mission as a business metric challenge, not a model-building exercise. The KPI: incremental qualified leads attributed to AI visibility (AI-driven touchpoints in the funnel). The framework: Treat AI visibility like any marketing channel and apply ROI and attribution rigor to it.

Build tension with complications

Complication 1 — Shifting targets. AI answer surfaces change quickly. New snippet formats, API-based indexers, and model updates mean a winning signal today can evaporate tomorrow. As it turned out, several high-value pages that owned FAQ snippets lost them after a model refresh.

Complication 2 — Attribution blindness. Last-click attribution breaks down when a prospect first encounters your brand via an AI assistant that never sends a visit to your page. Closed-loop analytics can't easily link an AI response to downstream conversions unless you instrument the chat-to-click path or run a controlled experiment.

Complication 3 — Scale. The content portfolio numbers in the thousands. Creating, optimizing, and monitoring each asset manually is prohibitively expensive. Meanwhile, the content ops team is judged on velocity and impact, not code commits.

These complications make the loop fragile: Monitor→Analyze→Create→Publish→Amplify→Measure→Optimize. If monitoring misses drift, analysis is late. If creation isn't automated, publishing lags. If measurement lacks attribution, optimization guesses. This is where automation must step in.

The turning point: A pragmatic, ROI-first automation architecture

We stopped treating AI as an exotic channel and instead treated it like paid search or email: define the touchpoint, instrument it, and experiment. The solution had seven parts, one per stage of the loop, each automated and tied to measurable business outcomes.

1) Monitor — automated discovery and signal capture

What we monitor:

- Search Engine Result Page (SERP) features (snippets, knowledge panels, People Also Ask)
- Presence in assistant APIs (where available) and third-party aggregator answers
- Page performance (load times, structured data errors)
- Content drift and entity coverage via embeddings comparison

Implementation: a scheduled crawler + SERP scraping service feeds a time-series database. A lightweight wrapper calls aggregator APIs (Bing, other partners) and stores whether a page is surfaced in AI answers. Alerts fire when visibility drops by X% over Y days. This created the feedback loop we lacked.
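The alert rule described above can be sketched in a few lines. This is a minimal illustration, not the team's actual alerting code: the function names are hypothetical, the input is assumed to be one visibility count per day (oldest first), and the defaults of 15% over 7 days are borrowed from the Quick Win checklist rather than from the production system.

```python
from statistics import mean


def visibility_drop_pct(daily_counts, window_days):
    """Percent drop of the most recent window versus the window before it."""
    if len(daily_counts) < 2 * window_days:
        raise ValueError("need at least two full windows of data")
    previous = mean(daily_counts[-2 * window_days:-window_days])
    recent = mean(daily_counts[-window_days:])
    if previous == 0:
        return 0.0  # no prior visibility to lose; also avoids dividing by zero
    return (previous - recent) / previous * 100.0


def should_alert(daily_counts, window_days=7, threshold_pct=15.0):
    """True when visibility fell by more than threshold_pct between windows."""
    return visibility_drop_pct(daily_counts, window_days) > threshold_pct


# Hypothetical series: 10 AI-answer appearances per day, then 8 per day.
history = [10] * 7 + [8] * 7
print(should_alert(history))
```

Comparing two adjacent windows keeps the rule simple; a real deployment would probably also want seasonality handling, which this sketch ignores.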

2) Analyze — attribution, lift, and root-cause

Analysis is where business impact is clarified. We layered three approaches:

- Attribution: multi-touch models that treat an AI encounter as a new touch type. Use last non-direct and time-decay models first, then implement probabilistic attribution for more nuance.
- Lift testing: holdout groups via geo or user-cohort randomization to measure incremental leads from AI-optimized content versus control. This gave a causal estimate of impact.
- Root-cause: automated content gap analysis using embeddings (vector similarity) to identify entity and question mismatches between our pages and the top AI answer content.
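To make the time-decay idea concrete, here is a small sketch that treats an AI encounter as just another touch type. The exponential half-life weighting is one common way to implement time decay and is an assumption here, as are the function name, the channel labels, and the 7-day half-life.

```python
from collections import defaultdict


def time_decay_attribution(touches, half_life_days=7.0):
    """Split one conversion's credit across channels.

    touches: list of (channel, days_before_conversion) pairs.
    A touch loses half its weight every half_life_days.
    Returns a dict mapping channel -> share of credit (shares sum to 1).
    """
    weights = defaultdict(float)
    for channel, days_before in touches:
        weights[channel] += 0.5 ** (days_before / half_life_days)
    total = sum(weights.values())
    if total == 0:
        return {}
    return {channel: weight / total for channel, weight in weights.items()}


# Hypothetical journey: AI assistant answer, then organic visit, then email.
journey = [("ai_assistant", 14), ("organic", 7), ("email", 0)]
for channel, share in time_decay_attribution(journey).items():
    print(f"{channel}: {share:.1%}")
```

Under last-click, the AI touch in this journey would receive no credit at all; with time decay it receives a small but non-zero share, which is the behavior the multi-touch approach is after.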

Business outcome: Instead of claiming “AI helped,” the team could say, “Optimizing our How-To pages for AI answers generated a 23% lift in organic assisted conversions, with a 6.2x incremental LTV-to-cost ratio.” That’s the language the CFO understands.

3) Create — automated, quality-controlled content production

Creation used templates and human-in-the-loop checks. The automation stack included:

- Prompt templates for model families (instruction + constraints + citations)
- Content scaffolds generated from structured data (product specs, API docs) to reduce hallucination risk
- Plagiarism and factuality checks using cross-referencing against trusted data sources
- Human review gates for high-risk pages

As it turned out, model selection mattered. Short-form answer drafts came from faster instruction-tuned models; long-form explainers used higher-context models with retrieval-augmented generation (RAG). A/B tests showed that RAG-based drafts reduced fact corrections by 78% and led to higher time-on-page.

4) Publish — CI/CD for content

We treated content like code. The CMS integrated with a content pipeline:

1. Draft auto-committed to a staging branch
2. Automated checks (schema.org markup, canonical tags, readability, keyword intent alignment)
3. CD job that publishes on pass, triggers indexer APIs (Google Indexing API for eligible pages, Bing content submission), and emits downstream cache invalidations

This led to faster test cycles (hours, not days) and reduced manual errors in structured data that previously cost visibility in AI features.
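One of the automated checks, structured-data validation, might look like the sketch below. It is a deliberately simplified, hypothetical gate: it only inspects a schema.org FAQPage JSON-LD string, assumes `mainEntity` is written as a list, and is no substitute for a full validator. The function name and error messages are illustrative.

```python
import json


def faq_jsonld_errors(jsonld_text):
    """Return a list of problems found in a FAQPage JSON-LD string (empty if none)."""
    try:
        data = json.loads(jsonld_text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be a JSON object"]

    errors = []
    if data.get("@type") != "FAQPage":
        errors.append("@type must be FAQPage")
    questions = data.get("mainEntity")
    if not isinstance(questions, list) or not questions:
        errors.append("mainEntity must be a non-empty list")
        return errors
    for i, question in enumerate(questions):
        if not isinstance(question, dict) or question.get("@type") != "Question":
            errors.append(f"mainEntity[{i}] must be a Question")
            continue
        if not question.get("name"):
            errors.append(f"mainEntity[{i}] is missing name")
        answer = question.get("acceptedAnswer")
        if not isinstance(answer, dict) or not answer.get("text"):
            errors.append(f"mainEntity[{i}] is missing acceptedAnswer.text")
    return errors
```

A pipeline step could fail the build whenever this function returns a non-empty list, so broken markup never reaches the publish job.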

5) Amplify — distribution and paid amplification tied to expected ROI

Amplification wasn't blind spending. We built an ROI model by page: expected incremental conversions × LTV - amplification cost. Pages with high expected LTV and defensible AI presence received paid amplification and syndication. Techniques included:

- Targeted paid search/paid social to pages expected to convert via assisted attribution
- Syndication via partner knowledge bases and vertical aggregators to seed AI answer surfaces
- Email personalization that surfaces AI-optimized answers in drip campaigns

Result: Paid spend to amplify AI-optimized assets produced a 3.1x incremental ROAS relative to baseline paid campaigns aimed at generic landing pages.
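The per-page ROI model (expected incremental conversions × LTV − amplification cost) is simple enough to sketch directly. The class name, field names, URLs, and all numbers below are hypothetical placeholders, not figures from the experiment.

```python
from dataclasses import dataclass


@dataclass
class PageForecast:
    url: str
    expected_incremental_conversions: float
    ltv: float
    amplification_cost: float

    @property
    def expected_net_value(self):
        # expected incremental conversions x LTV - amplification cost
        return self.expected_incremental_conversions * self.ltv - self.amplification_cost


def pages_to_amplify(forecasts, min_net_value=0.0):
    """Keep pages whose expected net value clears the bar, best first."""
    eligible = [f for f in forecasts if f.expected_net_value > min_net_value]
    return sorted(eligible, key=lambda f: f.expected_net_value, reverse=True)


# Hypothetical forecasts for three pages.
forecasts = [
    PageForecast("/docs/setup", 10, 1200.0, 4000.0),
    PageForecast("/blog/news", 2, 1200.0, 3000.0),
    PageForecast("/how-to/export", 5, 1200.0, 1000.0),
]
for page in pages_to_amplify(forecasts):
    print(page.url, page.expected_net_value)
```

Pages with a negative expected net value simply drop out of the list, which is what keeps amplification from becoming blind spending.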

6) Measure — closed-loop experiments and dashboards

Measurement combined event data with experimental design:

- Tag AI-surface exposures as events where possible (e.g., assistant API returns a card linking to our content)
- Create holdouts to estimate true lift (geo-based, user segment, or time-based)
- Use propensity scoring to balance cohorts when randomization is imperfect

We used a control chart for KPI drift, and ROI dashboards that combined acquisition cost, conversion rate, LTV, and attribution share. This allowed the leadership team to see dollar-per-visibility metrics (e.g., $ per AI-visibility lift).
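For the holdout comparison, a rough sketch of the lift calculation follows. It assumes a simple randomized split and uses a pooled two-proportion z-test, which is one standard choice and not necessarily the test the team ran; the function name and the sample counts are hypothetical.

```python
import math


def holdout_lift(treated_conv, treated_n, control_conv, control_n):
    """Relative lift of treatment over control, with a two-sided z-test.

    Returns (relative_lift, z_score, p_value).
    """
    p_t = treated_conv / treated_n
    p_c = control_conv / control_n
    relative_lift = (p_t - p_c) / p_c

    # Pooled standard error under the null hypothesis of equal rates.
    pooled = (treated_conv + control_conv) / (treated_n + control_n)
    se = math.sqrt(pooled * (1 - pooled) * (1 / treated_n + 1 / control_n))
    z = (p_t - p_c) / se

    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return relative_lift, z, p_value


# Hypothetical cohorts of 1,000 users each.
lift, z, p = holdout_lift(123, 1000, 100, 1000)
print(f"lift={lift:.1%} z={z:.2f} p={p:.3f}")
```

With these made-up counts the relative lift is 23%, yet the p-value stays above 0.05, a reminder that a headline lift figure needs enough sample behind it before it goes on a dashboard.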

7) Optimize — automated learning loops and model retraining

Optimization was continuous. Two techniques stood out:

- Active learning: pages with ambiguous signals were queued for targeted human edits based on model confidence and user signals
- Feature-store-driven personalization: user signals stored in a feature store fed personalization models that tailored content snippets for repeat visitors or high-intent cohorts
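The active-learning queue can be approximated with plain uncertainty sampling: send the pages whose model confidence sits closest to 0.5 to a human first. This sketch uses model confidence only and leaves out the user signals mentioned above; the function name, URLs, and scores are hypothetical.

```python
def review_queue(pages, top_k=3):
    """Return the top_k most ambiguous page URLs, most ambiguous first.

    pages: list of (url, model_confidence) pairs with confidence in [0, 1].
    Ambiguity is measured as distance from 0.5 (smaller means less certain).
    """
    def distance_from_coin_flip(item):
        return abs(item[1] - 0.5)

    ranked = sorted(pages, key=distance_from_coin_flip)
    return [url for url, _ in ranked[:top_k]]


# Hypothetical confidence scores from a page-quality classifier.
scored_pages = [("/a", 0.95), ("/b", 0.52), ("/c", 0.10), ("/d", 0.40)]
print(review_queue(scored_pages, top_k=2))
```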

Automation executed scheduled retraining for ranking models in the content recommender and updated prompts and retrieval indices to reflect new entity relationships. This maintained AI visibility as sources shifted.

Proof-focused examples and business impact

We ran a 12-week experiment on 120 product help pages. Design: 60 pages in the treatment group received full-loop automation (monitoring, automated RAG-based rewrites, canonicalized schema, and paid amplification); 60 were controls (standard SEO refresh). Metrics:

| Metric | Treatment | Control |
| --- | --- | --- |
| AI answer appearances | +42% | +4% |
| Organic-assisted conversions | +23% | +2% |
| Cost per incremental conversion | $48 | $170 |

This led to an estimated 5.6x LTV-to-cost ratio for the treatment group. The CFO signed off on scaling the automation pipeline.

Quick Win: Get AI visibility automation running in a week

Immediate checklist you can execute this week:

1. Pick 10 high-priority pages (high intent, high LTV).
2. Run a baseline: capture current SERP features, AI-answer occurrences, and conversions.
3. Automate a single-step RAG draft: pull product data, specs, FAQs into a generated answer and add schema.org FAQ markup.
4. Publish via CMS pipeline and call the indexing API (where available).
5. Set up an alert: if AI-answer occurrences drop >15% in 7 days, flag for human review.

Expected outcome: measurable increase in AI answer presence in 2–4 weeks and the ability to attribute any lift via time-based comparison and short holdouts.
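For the schema.org FAQ markup step, generating the JSON-LD from question/answer pairs takes only a few lines. The helper name and the sample question are hypothetical; the `FAQPage`, `Question`, and `Answer` types and the `mainEntity`, `name`, `acceptedAnswer`, and `text` properties come from the schema.org vocabulary.

```python
import json


def build_faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    document = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(document, indent=2)


# Hypothetical FAQ entry pulled from product data.
markup = build_faq_jsonld([("How do I export a report?", "Open Reports and choose Export.")])
print(markup)
```

The resulting string would typically be embedded in the page inside a `<script type="application/ld+json">` element.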

Advanced techniques worth investing in

- Vector-first content mapping: store embeddings of every page and incoming queries. Use nearest-neighbor hits to prioritize pages for updates and to seed RAG contexts for generation.
- Automated schema orchestration: programmatically generate and validate structured data and test results in search console APIs to keep knowledge panels and rich cards consistent.
- AI exposure tagging: build middleware that tags sessions with "AI-surface" if the user was exposed to an assistant answer before visiting; this improves attribution fidelity.
- Temporal A/B: run fast-turnaround experiments with time-sliced cohorts to detect model refresh effects on visibility.
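The vector-first mapping idea reduces to a nearest-neighbor lookup over page embeddings. The sketch below uses brute-force cosine similarity on toy two-dimensional vectors; real embeddings would come from an embedding model and a vector index, neither of which is shown, and all names here are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest_pages(query_vec, page_vecs, k=2):
    """Return the k pages most similar to the query as (url, score) pairs."""
    scored = [(cosine_similarity(query_vec, vec), url) for url, vec in page_vecs.items()]
    scored.sort(reverse=True)
    return [(url, score) for score, url in scored[:k]]


# Toy embeddings standing in for real page and query vectors.
pages = {"/a": [1.0, 0.0], "/b": [0.0, 1.0], "/c": [1.0, 1.0]}
print(nearest_pages([1.0, 0.0], pages, k=2))
```

Pages that rank high for many incoming queries but are missing from AI answers are natural candidates for the update queue.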

Thought experiments

Thought experiment 1 — The Cost Tradeoff:

Imagine two strategies. Strategy A: spend $200k/year on manual content creation and link-building. Strategy B: spend $200k/year on automation (pipelines, indexing APIs, RAG tooling, analytics). If automation yields a 20% reduction in time-to-publish and a 30% lift in AI-answer share, what is the effective cost per incremental conversion? Translate that into customer LTV to decide. The numbers often favor automation because it compounds: automation scales with content without linear cost increases.
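To put numbers on the thought experiment, a back-of-the-envelope calculation helps. Only the $200k spend and the 30% lift come from the scenario above; the baseline of 2,000 AI-touched conversions per year, the $1,500 LTV, and the assumption that conversions scale linearly with AI-answer share are all hypothetical inputs you would replace with your own.

```python
def cost_per_incremental_conversion(annual_spend, baseline_conversions, lift):
    """Spend divided by the extra conversions the lift is assumed to produce."""
    incremental = baseline_conversions * lift
    if incremental <= 0:
        raise ValueError("lift must produce at least some incremental conversions")
    return annual_spend / incremental


def ltv_to_cost_ratio(ltv, cost_per_conversion):
    """How many dollars of lifetime value each dollar of spend buys."""
    return ltv / cost_per_conversion


# $200k spend and 30% lift are from the scenario; the rest is assumed.
cost = cost_per_incremental_conversion(200_000, 2_000, 0.30)
ratio = ltv_to_cost_ratio(1_500, cost)
print(f"cost per incremental conversion: ${cost:,.2f}, LTV-to-cost: {ratio:.1f}x")
```

Running the same two functions for Strategy A, with its own estimated lift, gives the side-by-side comparison the thought experiment asks for.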

Thought experiment 2 — The Attribution Lens:

Suppose your analytics show 10% of conversions have an AI-mention touch. If you switch attribution from last-click to time-decay and then to a probabilistic model, the AI channel’s share can swing from 10% to 18% — materially changing budget allocation decisions. Design experiments to confirm causality, not just correlation.

Operational checklist to make this repeatable

1. Define the AI-visibility KPI and tie it to revenue (incremental conversions × LTV).
2. Instrument monitoring for SERP features and assistant exposures.
3. Automate RAG-based content generation with human review gates.
4. Publish via CI/CD with structured-data validators and indexer calls.
5. Amplify selectively based on expected ROI; use holdouts for lift measurement.
6. Build dashboards that combine exposure, attribution, and ROI.
7. Implement continuous learning: active learning queues and scheduled retraining.

Conclusion — The transformation

In the story’s final act, the SaaS company moved from ad hoc content updates and gut-feel decisions to a measurable, automated loop. Meanwhile, the content team spent less time chasing formatting errors and more time designing narrative hooks for AI answers. This led to a measurable lift in AI-driven conversions and a clear ROI story for continued investment. The loop — Monitor→Analyze→Create→Publish→Amplify→Measure→Optimize — became a differentiator rather than a burden.

The core lesson is simple: treat AI visibility like any other marketing channel. Instrument it, measure its causal impact, and automate the low-level work. The higher-value creative and strategic decisions become easier to justify when you can point to a controlled experiment and a cash-on-cash return.

Next step: pick a small set of high-priority pages, run the Quick Win checklist this week, and measure lift with a short holdout. If the data shows positive ROI, scale the pipeline. If not, iterate on the monitor and analyze steps until you find the signal. The automation is not magic; it's a disciplined application of measurement, tooling, and human judgment.